What Is Data Augmentation in Machine Learning?

In machine learning, data augmentation increases the diversity of a dataset without requiring the collection of new data. Applying basic transformations such as rotation, flipping, and scaling to the original data can improve the model’s performance.

The practice improves generalization and is especially useful when you’re working with smaller datasets, because it supplies a more varied set of training samples than the raw data alone.

For example, in image classification tasks, modifying images in minor ways shows the model the same objects from a wider range of angles. Training on this more robust dataset can help the model learn from fewer original samples and improve prediction accuracy.

It’s one of the most pragmatic solutions for getting the most out of our often-limited data resources.

What is Data Augmentation?

Define Data Augmentation

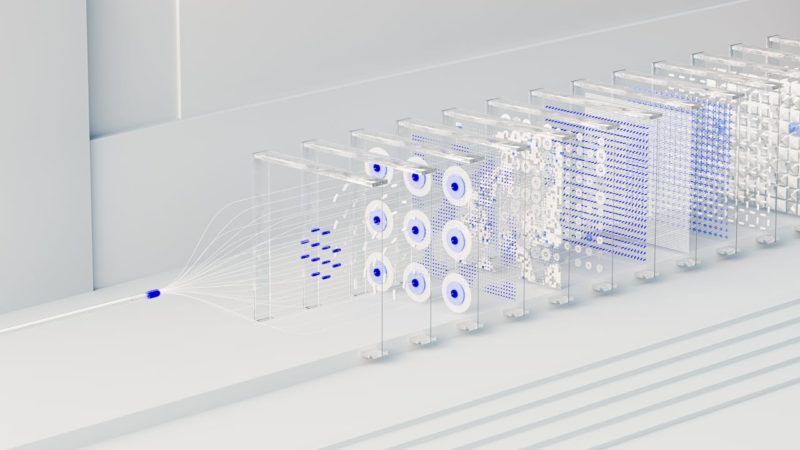

Data augmentation is a class of techniques that enlarge a training dataset by applying transformations to existing data to produce new samples, increasing both the size and the variability of the dataset.

This is especially important, as providing diverse examples to machine learning models allows them to generalize well to data they’ve never seen before. Data augmentation can be used on different types of data, including images and text.

For example, in image data, it could involve rotating, flipping, or shifting images to produce augmented versions of images. These modifications offer powerful ways to balance skewed datasets and improve model learning.
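As a minimal sketch of the rotating, flipping, and shifting described above, the snippet below uses plain NumPy array operations. The function name `basic_variants` and the tiny 3 x 4 array are illustrative assumptions, not part of any particular library.

```python
import numpy as np

def basic_variants(image: np.ndarray, shift: int = 2) -> dict:
    """Return a few simple variants of an H x W (or H x W x C) image array."""
    return {
        "horizontal_flip": image[:, ::-1],             # mirror left-to-right
        "vertical_flip": image[::-1, :],               # mirror top-to-bottom
        "rotate_90": np.rot90(image, k=1),             # 90 degrees counter-clockwise
        "shift_right": np.roll(image, shift, axis=1),  # wrap-around shift along the width
    }

# Toy "image": 3 rows, 4 columns, values 0..11
image = np.arange(12).reshape(3, 4)
variants = basic_variants(image, shift=1)
for name, variant in variants.items():
    print(name, variant.shape)
```

Whether each variant keeps the original label depends on the task, so the set of transformations should be chosen with the data in mind.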

Role in Machine Learning

Data augmentation is an essential component in making deep learning models learn effectively. By exposing the model to more variation during training, it improves generalization to uncontrolled real-world data.

Conventional transformations, like simple flips and translations, help make models robust to these variations. Deep learning-based augmentation methods can add further robustness, particularly when working with unlabeled data.

Albumentations, for instance, transforms bounding boxes and segmentation masks together with the image, which makes it possible to generate varied new images for detection and segmentation tasks. Researchers have found that combining multiple types of augmentation often improves outcomes.

The best combination depends on the dataset and the particular task. Choosing augmentations deliberately is what lets models make the most of the data available.

Importance of Data Augmentation

Data scarcity is a frequent bottleneck for machine learning projects. Data augmentation addresses it by giving the model more training examples without new data collection. By artificially expanding the dataset, it enhances the model’s capacity to generalize, allowing it to adapt better to different data conditions.

This increased ability to adapt leads to more accurate predictions overall, with fewer errors. Models trained on more varied data are also more robust to the variations they encounter at inference time.

Enhance Model Performance

Data augmentation is often a key ingredient in reaching state-of-the-art prediction accuracy. It helps models adapt to the varied data situations that occur in the real world, resulting in fewer mistakes in outputs. Researchers often use these techniques to rebalance imbalanced datasets, boosting model performance on tasks such as object detection.

For example, synthetic data produced through reinforcement learning has been reported to improve model performance.
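One way to picture rebalancing an imbalanced dataset is to oversample the minority class with augmented copies. The sketch below is a hedged illustration: `balance_with_augmentation` is a hypothetical helper, and it assumes that a horizontal flip does not change the class of an image, which is not true for every dataset.

```python
import numpy as np

def balance_with_augmentation(images, labels, rng):
    """Oversample smaller classes with randomly flipped copies until
    every class has as many samples as the largest class."""
    images = np.asarray(images)   # shape (N, H, W)
    labels = np.asarray(labels)   # shape (N,), integer class ids
    classes, counts = np.unique(labels, return_counts=True)
    target = counts.max()
    new_images, new_labels = [images], [labels]
    for cls, count in zip(classes, counts):
        deficit = target - count
        if deficit == 0:
            continue
        pool = images[labels == cls]
        extra = pool[rng.integers(0, len(pool), size=deficit)]
        # Flip roughly half of the copies left-to-right
        flip = rng.random(deficit) < 0.5
        extra = np.where(flip[:, None, None], extra[:, :, ::-1], extra)
        new_images.append(extra)
        new_labels.append(np.full(deficit, cls))
    return np.concatenate(new_images), np.concatenate(new_labels)

rng = np.random.default_rng(0)
images = rng.random((10, 4, 4))
labels = np.array([0] * 8 + [1] * 2)   # class 1 is under-represented
bal_x, bal_y = balance_with_augmentation(images, labels, rng)
print(np.bincount(bal_y))
```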

Increase Data Diversity

Diverse datasets are incredibly important for training robust models. By intentionally introducing variations that reflect real-world conditions, data augmentation helps models learn patterns across diverse data. Methods such as mix-up, which linearly combines pairs of inputs and their labels, and synonym replacement, which substitutes words with synonyms, increase data variety.
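To make the idea of linearly combining inputs concrete, here is a minimal mix-up sketch in NumPy. It assumes one-hot labels and draws the mixing weight from a Beta(alpha, alpha) distribution; the function name and the `alpha=0.2` value are illustrative choices rather than recommended settings.

```python
import numpy as np

def mixup(x, y_onehot, alpha, rng):
    """Blend each sample (and its one-hot label) with a randomly chosen partner."""
    lam = rng.beta(alpha, alpha)      # mixing weight in [0, 1]
    perm = rng.permutation(len(x))    # partner index for every sample
    x_mixed = lam * x + (1.0 - lam) * x[perm]
    y_mixed = lam * y_onehot + (1.0 - lam) * y_onehot[perm]
    return x_mixed, y_mixed, lam

rng = np.random.default_rng(0)
x = rng.random((4, 3))                # 4 samples, 3 features
y = np.eye(2)[[0, 1, 0, 1]]           # one-hot labels for 2 classes
x_mixed, y_mixed, lam = mixup(x, y, alpha=0.2, rng=rng)
print(x_mixed.shape, y_mixed.sum(axis=1))
```

Because the labels are mixed with the same weight as the inputs, every mixed label still sums to one.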

This increased diversity helps build a well-rounded understanding of data that is advantageous for many machine learning tasks.

Benefits of Data Augmentation

Data augmentation provides several benefits that improve model performance across many industries. By enlarging the training set with numerous and varied adaptations, it strengthens model robustness and adaptability.

As a result, machine learning models can become both more accurate and more efficient to train.

Improve Model Accuracy

Data augmentation is a key factor in increasing model accuracy. It gives models the opportunity to learn from a much broader range of scenarios than the original data covers.

When models are trained on a diverse dataset, they are better equipped to perform in the real world, which results in better predictions.

Reduce Overfitting

Overfitting happens when models closely memorize training data, resulting in a decline in generalization. Data augmentation adds a layer of randomness that helps fight this problem.

With the addition of varied examples, models are better able to generalize and handle new, unseen data. This is crucial for use cases in fast-changing domains such as autonomous driving, where real-world uncertainty is ever-present.

Optimize Training Efficiency

Another major benefit is that with data augmentation, the dependency on massive labeled datasets is lessened, saving time and money while simplifying the process.

This efficiency reduces the time needed to deploy and iterate on models, which is critical for businesses trying to keep pace with AI innovation.

Combined with generative AI, augmentation lets companies simulate a wide range of real-world situations, which is valuable in industries such as finance and logistics.

Techniques for Data Augmentation

Machine learning is a field that is very thirsty for data. Data augmentation techniques can be seen as powerful tools to enrich datasets and subsequently increase model performance. These techniques can be broadly classified into two categories: traditional methods and advanced deep learning approaches. Each category provides distinct applications and advantages.

Traditional Methods

Classical data augmentation approaches involve transformations such as rotation, flipping, and scaling. Simple but powerful, these techniques are one of the most common ways to augment image datasets. For example, flipping images upside-down and left-to-right can add variations that ultimately allow models to learn more robust features.

Libraries such as Keras’ ImageDataGenerator take the hassle out of such processes. These approaches have contributed to strong results on a variety of image recognition tasks. They increase dataset size and variability, all without needing to collect new data.
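The classical transformations above are usually applied at random each time an image is drawn, so the model rarely sees the same variant twice. The following is a rough NumPy-only sketch of that idea, not a reproduction of any library’s pipeline. The names `zoom_in` and `random_augment`, the 90-degree rotation steps, and the 1.0 to 1.5 zoom range are assumptions made for illustration, and a square image is assumed so that rotation keeps the shape.

```python
import numpy as np

def zoom_in(image, scale):
    """Center-crop by a factor of 1/scale and resize back with
    nearest-neighbor sampling (expects scale >= 1)."""
    h, w = image.shape[:2]
    crop_h = max(1, int(round(h / scale)))
    crop_w = max(1, int(round(w / scale)))
    top, left = (h - crop_h) // 2, (w - crop_w) // 2
    crop = image[top:top + crop_h, left:left + crop_w]
    rows = np.arange(h) * crop_h // h   # source row for each output row
    cols = np.arange(w) * crop_w // w   # source column for each output column
    return crop[rows][:, cols]

def random_augment(image, rng):
    """Random horizontal flip, random multiple-of-90-degree rotation, random zoom."""
    if rng.random() < 0.5:
        image = image[:, ::-1]
    image = np.rot90(image, k=int(rng.integers(0, 4)))
    return zoom_in(image, scale=rng.uniform(1.0, 1.5))

rng = np.random.default_rng(42)
image = rng.integers(0, 256, size=(8, 8, 3), dtype=np.uint8)
# Each "epoch" gets a freshly augmented view of the same image
epoch_views = [random_augment(image, rng) for _ in range(3)]
print([view.shape for view in epoch_views])
```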

Advanced Deep Learning Approaches

More sophisticated techniques use generative models and neural networks to learn to do more complex augmentations. These methods can generate new synthetic data, offering a promising method to augment datasets. Instance-level augmentation consists of copying labeled object segments from one image onto another.

This technique works especially well for object detection and for pixel-wise tasks such as image segmentation. Mix-up augmentation, another widely used technique, interpolates between pairs of training examples and their labels to create new samples. Research has found that mixing different types of augmentation can significantly boost model performance. The optimal combination will vary based on the datasets and tasks being used.
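Instance-level augmentation, as described above, can be sketched as cutting an object out of one image with its mask and pasting it into another image while updating the label map. The helper `paste_instance` below is hypothetical and deliberately simple: it assumes the pasted patch fits entirely inside the destination image and does no blending or rescaling.

```python
import numpy as np

def paste_instance(src_image, src_mask, dst_image, dst_labels, top, left, class_id):
    """Copy the object selected by the boolean src_mask onto dst_image at (top, left).
    Returns a new image and a new per-pixel label map; inputs are left untouched.
    Assumes the object's bounding box fits inside the destination."""
    ys, xs = np.nonzero(src_mask)
    y0, y1, x0, x1 = ys.min(), ys.max() + 1, xs.min(), xs.max() + 1
    patch = src_image[y0:y1, x0:x1]
    patch_mask = src_mask[y0:y1, x0:x1]
    h, w = patch_mask.shape

    out_image = dst_image.copy()
    out_labels = dst_labels.copy()
    region = out_image[top:top + h, left:left + w]   # view into out_image
    region[patch_mask] = patch[patch_mask]           # overwrite only object pixels
    out_labels[top:top + h, left:left + w][patch_mask] = class_id
    return out_image, out_labels

src_image = np.full((6, 6), 9)
src_mask = np.zeros((6, 6), dtype=bool)
src_mask[1:3, 1:4] = True                 # a 2 x 3 object in the source image
dst_image = np.zeros((6, 6), dtype=int)
dst_labels = np.zeros((6, 6), dtype=int)  # 0 means background

new_image, new_labels = paste_instance(
    src_image, src_mask, dst_image, dst_labels, top=3, left=2, class_id=1
)
print(new_image)
```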

Advanced Deep Learning Approaches

Generative Models

Generative models are systems that learn to generate new data instances that resemble the training data. Because they can produce realistic images or text, they can greatly expand training datasets. For example, Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) have gained popularity for the creation of synthetic datasets.

These models capture the complexity of real data, therefore providing a richer foundation for training downstream machine learning models. A real-world example includes employing GANs to create diverse and high-quality image datasets. This greatly improves the models’ ability to generalize across unseen data.

Neural Style Transfer

Done right, neural style transfer can be an impressive technique. It takes the style of one image and puts it on top of the content of another, creating beautiful, synthetic augmented data. This approach is particularly important when we consider artistic applications and creative economy.

It allows creators to experiment with new forms by combining different artistic styles. It introduces limitless creativity, equipping digital designers with tools to expand the frontiers of digital artistry.

Augmentation Networks

Augmentation networks are dedicated models designed for data augmentation that learn the best augmentation strategies during training. In practice, they improve the quality of the generated data, increasing its utility for downstream applications.

These architectures can independently learn how to change saturation and brightness. They can also use transformations such as RandomFlip, RandomRotation, and RandomZoom (the names of Keras preprocessing layers), increasing diversity in the dataset and improving model performance in multiple domains.
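The brightness and saturation changes mentioned above come down to a couple of numeric parameters per image. The sketch below shows only the parameterized transformations, with the parameters sampled at random; an augmentation network would learn or select such parameters instead. It assumes RGB images stored as floats in [0, 1], and it uses a plain channel mean as the gray level, which is a simplification.

```python
import numpy as np

def adjust_brightness(image, delta):
    """Add a constant offset to every pixel and clip to the valid range."""
    return np.clip(image + delta, 0.0, 1.0)

def adjust_saturation(image, factor):
    """Move each pixel toward (factor < 1) or away from (factor > 1) its gray value."""
    gray = image.mean(axis=-1, keepdims=True)
    return np.clip(gray + factor * (image - gray), 0.0, 1.0)

def color_jitter(image, rng, max_delta=0.2, sat_range=(0.5, 1.5)):
    """Apply a random brightness offset followed by a random saturation factor."""
    delta = rng.uniform(-max_delta, max_delta)
    factor = rng.uniform(*sat_range)
    return adjust_saturation(adjust_brightness(image, delta), factor)

rng = np.random.default_rng(7)
image = rng.random((4, 4, 3))
jittered = color_jitter(image, rng)
print(jittered.min(), jittered.max())
```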

Examples of Data Augmentation

Image Data Applications

Data augmentation really finds its footing in the world of image classification. Methods like horizontal or vertical flipping and rotation expand training datasets with many different examples.

These techniques enable CNNs to learn from many different viewpoints, significantly improving their performance. Instance-level augmentation improves performance by copying labeled object regions from one image to another.

This technique is particularly beneficial for object detection and image segmentation tasks.

Audio Data Applications

For audio datasets, data augmentation often contributes to state-of-the-art machine learning models, using techniques such as pitch shifting and time stretching.

These techniques alter the pitch or duration of a recording, and background noise can be added as a further variation, improving model performance on tasks such as speech recognition and music understanding. By changing sound frequencies or lengths, models become better at handling differences in audio inputs.
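The snippet below is a simplified NumPy sketch of two audio perturbations: a speed change by resampling and additive noise at a chosen signal-to-noise ratio. Note the assumption: naive resampling changes pitch and duration together, whereas true pitch shifting or time stretching changes one without the other and is usually done with a dedicated audio library. The function names and the 440 Hz test tone are illustrative.

```python
import numpy as np

def change_speed(signal, rate):
    """Resample by linear interpolation. rate > 1 shortens the clip and raises
    the pitch; rate < 1 lengthens it and lowers the pitch."""
    n_out = int(round(len(signal) / rate))
    old_positions = np.arange(len(signal))
    new_positions = np.linspace(0, len(signal) - 1, n_out)
    return np.interp(new_positions, old_positions, signal)

def add_noise(signal, snr_db, rng):
    """Add Gaussian noise scaled to an expected signal-to-noise ratio in decibels."""
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / (10 ** (snr_db / 10))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)
    return signal + noise

sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 440 * t)    # one second of a 440 Hz tone

rng = np.random.default_rng(0)
faster = change_speed(tone, rate=2.0)
slower = change_speed(tone, rate=0.5)
noisy = add_noise(tone, snr_db=20, rng=rng)
print(len(tone), len(faster), len(slower))
```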

Text Data Applications

For text data specifically, data augmentation includes methods such as synonym replacement and random insertion.

These approaches benefit natural language processing (NLP) tasks by improving the robustness of language models. For instance, mix-up-style augmentations interpolate the embeddings of two text samples, together with their labels, generating novel data that helps to better train models.
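Synonym replacement and random insertion can be sketched with the standard library alone. The `SYNONYMS` dictionary below is a hypothetical toy thesaurus invented for this example; a real project would use a proper lexical resource and would check that replacements keep the meaning of the sentence.

```python
import random

# Hypothetical toy thesaurus, for illustration only
SYNONYMS = {
    "quick": ["fast", "speedy"],
    "happy": ["glad", "cheerful"],
    "big": ["large", "huge"],
}

def synonym_replacement(tokens, n, rng):
    """Replace up to n tokens that have an entry in SYNONYMS."""
    out = list(tokens)
    candidates = [i for i, tok in enumerate(out) if tok in SYNONYMS]
    rng.shuffle(candidates)
    for i in candidates[:n]:
        out[i] = rng.choice(SYNONYMS[out[i]])
    return out

def random_insertion(tokens, n, rng):
    """Insert n synonyms of known words at random positions."""
    out = list(tokens)
    known = [tok for tok in tokens if tok in SYNONYMS]
    if not known:
        return out
    for _ in range(n):
        synonym = rng.choice(SYNONYMS[rng.choice(known)])
        out.insert(rng.randrange(len(out) + 1), synonym)
    return out

rng = random.Random(0)
tokens = "the quick dog is happy".split()
replaced = synonym_replacement(tokens, n=1, rng=rng)
inserted = random_insertion(tokens, n=2, rng=rng)
print(" ".join(replaced))
print(" ".join(inserted))
```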

Video Data Applications

In action recognition on video, data augmentation involves frame skipping and temporal transformations.

These techniques are crucial for training better action recognition models, empowering them to better generalize to in-the-wild video inputs.
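Frame skipping and a simple temporal transformation can be expressed as slicing along the time axis. The sketch below assumes a video stored as a NumPy array of shape (frames, height, width, channels); `skip_frames` and `random_temporal_crop` are hypothetical helper names.

```python
import numpy as np

def skip_frames(video, stride, offset=0):
    """Keep every stride-th frame, starting at the given offset."""
    return video[offset::stride]

def random_temporal_crop(video, clip_len, rng):
    """Pick a random contiguous clip of clip_len frames."""
    start = int(rng.integers(0, len(video) - clip_len + 1))
    return video[start:start + clip_len]

# Toy video: 30 frames of 4 x 4 RGB, where every pixel stores its frame index
video = np.arange(30)[:, None, None, None] * np.ones((1, 4, 4, 3))

rng = np.random.default_rng(0)
skipped = skip_frames(video, stride=3, offset=1)
clip = random_temporal_crop(video, clip_len=16, rng=rng)
print(skipped.shape, clip.shape)
```

Varying the stride and the start position gives the model clips that play at different effective speeds and begin at different moments of the action.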

Sensor and IoT Data Applications

Creating variations in sensor data by adding noise helps simulate the unpredictability of real-world deployments.

This method is very important in predictive maintenance and anomaly detection, where it makes the model more robust to noisy readings.
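Adding noise to sensor data can be as simple as the sketch below, which applies Gaussian jitter to every reading and a random gain to each channel. The function names, the sigma values, and the synthetic two-channel signal are assumptions chosen for illustration; suitable noise levels depend on the actual sensor.

```python
import numpy as np

def jitter(series, sigma, rng):
    """Add independent Gaussian noise to every reading."""
    return series + rng.normal(0.0, sigma, size=series.shape)

def scale_channels(series, sigma, rng):
    """Multiply each channel by a random gain close to 1, imitating calibration drift."""
    gains = rng.normal(1.0, sigma, size=(1, series.shape[1]))
    return series * gains

rng = np.random.default_rng(0)
t = np.linspace(0, 2 * np.pi, 100)
readings = np.stack([np.sin(t), np.cos(t)], axis=1)   # shape (time steps, channels)

augmented = scale_channels(jitter(readings, sigma=0.05, rng=rng), sigma=0.1, rng=rng)
print(augmented.shape)
```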

Tools for Data Augmentation

Popular frameworks such as TensorFlow and PyTorch make it easy to implement data augmentation.

Libraries like Augmentor or the torchvision.transforms module in PyTorch provide a wide range of ready-to-use transformations for easy integration.

Challenges of Data Augmentation

One of the biggest challenges of data augmentation is ensuring data integrity. If you’re modifying images or text to generate new data samples, pay close attention to retaining the original characteristics. These very features are what make the data valuable.

To return to the rotation example: a rotation that is applied carelessly can distort crucial features or even change the meaning of an image, as when a handwritten 6 rotated by 180 degrees becomes a 9. This is where domain expertise is key. Developing an understanding of the specific nuances of the dataset goes a long way in deciding which augmentation techniques to implement.

For example, in medical imaging, when dealing with sensitive attributes, even small perturbations can create unrealistic data, which damages the model’s performance.

Another challenge is the danger of over-augmentation. Over-augmenting with variations that are not realistic or relevant can add noise to the data and confuse the learning process.

It’s a fine line to walk to improve the dataset without departing from real-world conditions.

Addressing Common Issues

To mitigate these challenges, it is necessary to use strategies such as cross-validation and deliberate selection of augmentation techniques. Test augmented data thoroughly to confirm that each change actually leads to better model performance.

By continuously monitoring models after augmentation, it’s possible to catch any decline in accuracy and make changes accordingly.

Potential Limitations

The best combination of techniques is usually task- and dataset-dependent. Although several augmentations can be helpful, they can be computationally costly, particularly with large datasets.

Evaluating the effectiveness of augmentation is very important. You have to balance the quality of the original data with the task requirements.
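One hedged way to evaluate augmentation with cross-validation is to split the data first and augment only the training folds, so that validation always uses untouched original samples. The sketch below uses synthetic two-class data, Gaussian noise as the augmentation, and a tiny nearest-centroid classifier as a stand-in model. All of these are illustrative assumptions rather than a recommended setup.

```python
import numpy as np

def kfold_indices(n_samples, k, rng):
    """Yield (train_idx, val_idx) pairs for k shuffled folds."""
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, folds[i]

def augment_training_set(x, y, rng, noise_std=0.05):
    """Append one noisy copy of every training sample."""
    noisy = x + rng.normal(0.0, noise_std, size=x.shape)
    return np.concatenate([x, noisy]), np.concatenate([y, y])

def nearest_centroid_accuracy(x_train, y_train, x_val, y_val):
    """Stand-in model: classify each point by its closest class centroid."""
    classes = np.unique(y_train)
    centroids = np.stack([x_train[y_train == c].mean(axis=0) for c in classes])
    dists = np.linalg.norm(x_val[:, None, :] - centroids[None, :, :], axis=2)
    return float((classes[dists.argmin(axis=1)] == y_val).mean())

def cross_validate(x, y, k, rng, use_augmentation):
    scores = []
    for train_idx, val_idx in kfold_indices(len(x), k, rng):
        x_train, y_train = x[train_idx], y[train_idx]
        if use_augmentation:
            # Augment the training fold only; validation data stays original
            x_train, y_train = augment_training_set(x_train, y_train, rng)
        scores.append(nearest_centroid_accuracy(x_train, y_train, x[val_idx], y[val_idx]))
    return float(np.mean(scores))

data_rng = np.random.default_rng(0)
x = np.concatenate([data_rng.normal(0, 1, (20, 2)), data_rng.normal(3, 1, (20, 2))])
y = np.array([0] * 20 + [1] * 20)

# Same seed for both runs so they are evaluated on identical folds
baseline = cross_validate(x, y, k=5, rng=np.random.default_rng(1), use_augmentation=False)
augmented = cross_validate(x, y, k=5, rng=np.random.default_rng(1), use_augmentation=True)
print(f"baseline={baseline:.3f} augmented={augmented:.3f}")
```

Comparing the two scores on the same folds indicates whether a given augmentation is helping, hurting, or making no difference for the task at hand.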

Conclusion

Data augmentation plays a key role in deep learning by increasing the diversity of training datasets, which improves model performance and accuracy. This technique offers opportunities to improve outcomes in industries such as health, technology and retail.

Data augmentation processes like flipping, rotating, and scaling images allow models to learn better. Open-source tools make data augmentation easier than ever before, allowing developers to train smarter algorithms. Although challenges such as greater computation and the need for more thoughtful implementation exist, the advantages greatly exceed the disadvantages.

As the field continues to advance at an unprecedented pace, data augmentation will continue to be an important factor in machine learning breakthroughs. Adopt these tools and techniques to propel your projects to success and remain at the forefront in this rapidly changing technological environment.

Frequently Asked Questions

What is data augmentation in machine learning?

Data augmentation is a powerful technique to make your training dataset more diverse. It’s a process that includes generating altered copies of the original data through various transformations such as rotation, cropping and flipping.

Why is data augmentation important?

Data augmentation is an important tool for achieving better model performance. It helps avoid overfitting by offering a wider range of training examples, improving the model’s generalization capability.

What are the benefits of data augmentation?

Data augmentation helps to increase the size of the dataset without having to collect more data. It increases both the accuracy and the robustness of the model by adding controlled randomness, which is valuable for complex tasks such as image recognition.

What are some common techniques for data augmentation?

Typical augmentation methods for image data apply random transformations, such as rotating, scaling, or flipping the image. More advanced techniques rely on deep learning, including generative adversarial networks (GANs) and neural style transfer.

What tools are used for data augmentation?

Popular options include Keras’ ImageDataGenerator (available through TensorFlow), the torchvision.transforms module for PyTorch, and Albumentations. These libraries provide built-in functionality for applying many data augmentation techniques.

What are the challenges of data augmentation?

The main challenges are preserving data integrity when transforming samples and making sure the augmented data remains realistic. Finding the right balance between diversity and relevance is essential for good augmentation.
